The year 2025 finds us no longer on the cusp of an AI revolution, but fully immersed in it. By the end of the decade, there will be two kinds of companies: (1) those that fully utilize AI, and (2) those that are equipped to deal with a world that no longer exists. The transformative power of AI, however, comes with inherent risks. Algorithmic bias, the spectre of data breaches, and the complexities of evolving regulatory landscapes demand a robust and proactive response. A solid Governance, Risk, and Compliance (GRC) framework is not merely advisable; it is an organizational imperative for managing AI effectively, fostering responsible innovation, and mitigating potential harms. We know from experience that complex challenges require structured approaches, and AI is no exception. We must move beyond reactive measures and embrace proactive governance: establishing clear policies, defining responsibilities, and cultivating a culture of ethical AI development.

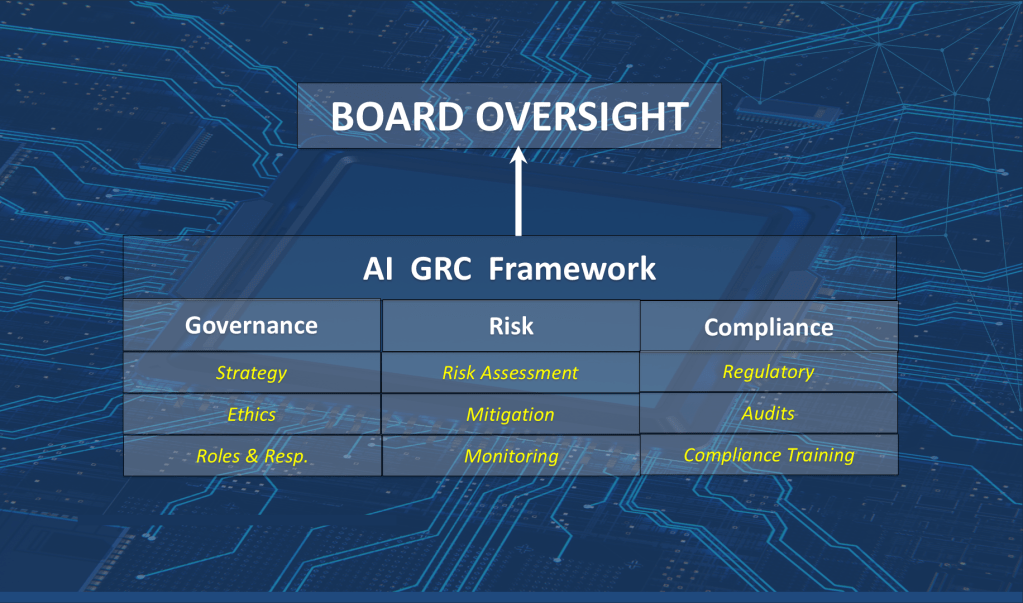

The GRC Imperative for AI

The rapid integration of AI across industries necessitates a holistic GRC approach:

- Governance sets the strategic direction, establishes clear policies, defines responsibilities, and fosters a culture of ethical AI development.
- Risk management identifies and mitigates potential AI-related harms, safeguarding the organization and its stakeholders.
- Compliance keeps the organization ahead of the curve by ensuring adherence to AI regulations, laws, and ethical guidelines.

Unpacking GRC in the Context of AI

Let’s delve into the core components of GRC in the context of managing AI in organizations.

Governance (G): Effective AI governance demands a clear and structured approach, establishing the framework for responsible AI development and deployment. This begins with the formation of an AI Governance Committee comprising diverse stakeholders, including ethics experts, legal counsel, and technical specialists. This committee is responsible for setting the strategic direction, defining roles and responsibilities, and ensuring alignment with organizational values. Policies and procedures must be meticulously documented, covering critical aspects such as data usage, algorithmic transparency, and accountability. Top-down commitment is essential: the board must be actively engaged in overseeing AI initiatives, ensuring that these align with the organization’s strategic goals and risk appetite. Leaders must champion a culture of ethical AI, fostering awareness and understanding across the organization through comprehensive training on AI ethics, data privacy, and regulatory compliance. Regular reviews and updates are crucial to adapt to the rapidly evolving AI landscape. Furthermore, establishing clear decision-making processes is paramount, so that AI-related decisions are made with due consideration of their ethical and societal implications.

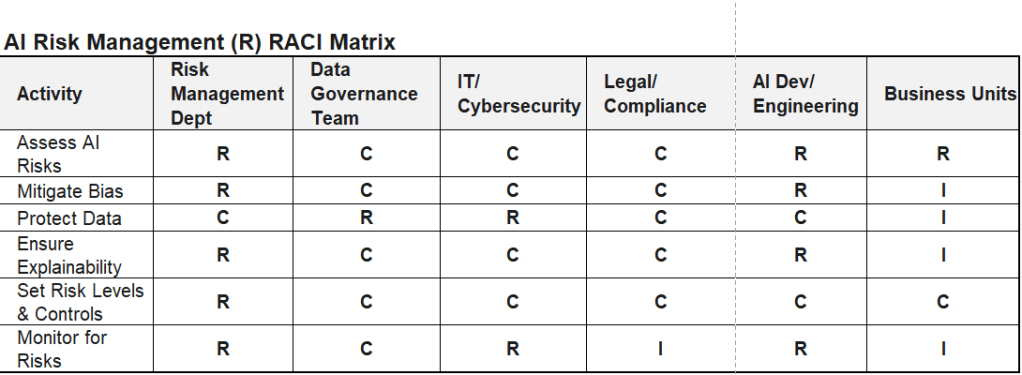

Risk Management (R): AI risk management is a dynamic and ongoing process that requires a proactive and vigilant approach. It begins with a comprehensive AI risk assessment, which should consider the multifaceted risks of algorithmic bias, data privacy breaches, and potential misuse. These assessments must be conducted periodically and, crucially, whenever major events occur (e.g., the advent of ChatGPT, which rapidly democratized AI) or AI systems change, so that they remain current and relevant. Mitigating algorithmic bias is a critical concern, particularly in sensitive areas like recruitment and lending. Data privacy and security risks are also paramount, necessitating the implementation of robust data governance and security measures. Transparency and explainability are essential pillars for building trust in AI systems. Establishing clear risk tolerance levels and implementing appropriate controls is crucial for managing AI risks effectively. Scenario planning and stress testing can equip organizations to anticipate potential AI-related risks and develop robust contingency plans. Moreover, as regulations and ethical guidelines evolve, organizations must continually adapt their risk management practices to maintain compliance and uphold ethical standards.
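As a rough illustration of how a risk assessment and a risk tolerance level might fit together, here is a minimal sketch of a risk register. Everything in it is an assumption made for illustration: the 1-to-5 likelihood and impact scales, the likelihood-times-impact score, the sample entries, and the tolerance value of 9 are hypothetical and would need to be set by each organization.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AIRisk:
    """One entry in a hypothetical AI risk register."""
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (negligible) to 5 (severe)

    @property
    def score(self) -> int:
        # A common simple heuristic: likelihood multiplied by impact.
        return self.likelihood * self.impact


def risks_above_tolerance(risks, tolerance):
    """Return the risks whose score exceeds the tolerance, highest first."""
    flagged = [r for r in risks if r.score > tolerance]
    return sorted(flagged, key=lambda r: r.score, reverse=True)


# Illustrative entries only; real assessments come from the organization.
REGISTER = [
    AIRisk("Algorithmic bias in recruitment screening", likelihood=4, impact=5),
    AIRisk("Data privacy breach in training data", likelihood=2, impact=5),
    AIRisk("Misuse of generative AI by staff", likelihood=3, impact=2),
]
RISK_TOLERANCE = 9  # hypothetical threshold agreed by the governance body

if __name__ == "__main__":
    for risk in risks_above_tolerance(REGISTER, RISK_TOLERANCE):
        print(f"{risk.score:>2}  {risk.name}")
```

Re-running the same check after a major event or a system change is one simple way to keep the assessment current, since only the register entries need to be updated.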

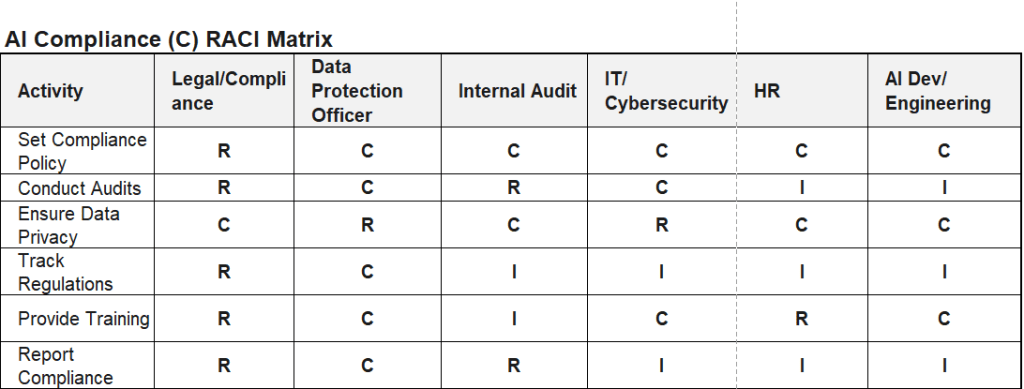

Compliance (C): In the AI era, effective compliance demands a deep and nuanced understanding of the evolving regulatory landscape. It is about ensuring that AI systems adhere to relevant laws, regulations, and ethical guidelines. Organizations must stay abreast of the latest regulatory developments and proactively adapt their compliance strategies. This includes navigating the complexities of data privacy regulations like GDPR and the emergence of AI-specific regulations. Regular audits and assessments are essential for verifying ongoing compliance with AI-related regulations. Compliance training and awareness programs are crucial tools for educating employees about their responsibilities and fostering a culture of compliance. Establishing clear lines of communication with regulators and stakeholders is paramount for building trust. Furthermore, meticulous compliance reporting and documentation are not merely bureaucratic exercises; they are crucial for demonstrating adherence to regulatory requirements and building stakeholder confidence. Compliance, therefore, is not simply about avoiding penalties. It is about upholding ethical standards, fostering trust, and ensuring the responsible adoption of AI.

GRC in Action: RACI

Managing AI effectively can be an overwhelming task, given the complexity of the regulatory landscape and the rapid pace of technological change. A clear assignment of responsibility and accountability is therefore essential. The RACI matrices provided below offer a representative framework and a good starting point for delineating these roles across the Governance, Risk, and Compliance functions. They will need to be modified depending on the industry, size, nature, and AI maturity of the organization.
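For teams that keep such a matrix in a machine-readable form, a small consistency check can catch gaps in accountability. The sketch below is only an illustration: the activities and role assignments are hypothetical and are not taken from the matrices in this article, and the rule it checks (exactly one Accountable and at least one Responsible role per activity) is the usual RACI convention rather than a requirement stated here.

```python
# R = Responsible, A = Accountable, C = Consulted, I = Informed
VALID_CODES = {"R", "A", "C", "I"}

# Hypothetical example matrix: activity -> {role: RACI code}
RACI = {
    "Approve AI policy": {
        "Board": "A",
        "AI Governance Committee": "R",
        "Legal Counsel": "C",
        "Technical Specialists": "I",
    },
    "Run AI risk assessment": {
        "Board": "I",
        "AI Governance Committee": "A",
        "Legal Counsel": "C",
        "Technical Specialists": "R",
    },
    "Audit regulatory compliance": {
        "Board": "I",
        "AI Governance Committee": "A",
        "Legal Counsel": "R",
        "Technical Specialists": "C",
    },
}


def validate_raci(matrix):
    """Return a list of human-readable problems; empty if the matrix is consistent."""
    problems = []
    for activity, assignments in matrix.items():
        codes = list(assignments.values())
        unknown = sorted(set(codes) - VALID_CODES)
        if unknown:
            problems.append(f"{activity}: unknown codes {unknown}")
        if codes.count("A") != 1:
            problems.append(
                f"{activity}: expected exactly one Accountable role, "
                f"found {codes.count('A')}"
            )
        if "R" not in codes:
            problems.append(f"{activity}: no Responsible role assigned")
    return problems


if __name__ == "__main__":
    issues = validate_raci(RACI)
    print("RACI matrix is consistent" if not issues else "\n".join(issues))
```

A check like this could be run whenever the matrix is adapted to a new industry, size, or level of AI maturity, so that no activity is left without a single accountable owner.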

Conclusion: The GRC approach above provides the necessary framework for organizations to navigate the AI frontier with confidence. By establishing robust governance, proactively managing risks, and ensuring compliance, organizations can build a solid foundation for responsible AI innovation. This framework empowers leaders and the workforce to boldly undertake AI-driven transformations, unlocking the immense potential of AI while safeguarding the interests of all stakeholders. The end game is for organizations to remain competitive and avoid disruption by more agile competitors.

Thank you for sharing the insights Sir. Agree that a well-integrated GRC approach ensures AI is not only powerful, but also safe, fair, and accountable.

One example I read recently was of an Indian school that tried using an AI tool to automatically grade written essays in internal school exams.

Based on the data the AI was trained on, the AI tool favored essays written in perfect English and penalized students who used regional expressions or grammar variations, even if their ideas were strong.

It failed to understand context, creativity, or emotion, which human evaluators value.

Rural and non-native English-speaking students were unfairly scored lower. It discouraged creativity and critical thinking. As a result, students started losing trust in the evaluation system. So, a well-integrated GRC approach ensures AI is not only powerful, but also safe, fair, and accountable.


Thanks Angad for your thoughts. I agree the use case you have written about is totally unacceptable. And a proper governance mechanism may have prevented this. Perhaps the POC was not done on the right representative group – empathy is going to be critical for AI to succeed in countries like India where our leaders want to replicate the kind of pan-India success we had with the India Stack.
