Thinking ≠ Theory of Mind

Let’s start with something basic — but deeply human.

We humans just get that other people have thoughts, feelings, beliefs, and intentions — which may be wildly different from our own. This ability is called Theory of Mind (ToM). It’s how we sense when someone’s upset even if they say, “I’m fine.” It’s how we pick up on sarcasm, irony, and all the delicious messiness of human interaction 😊.

By the age of four or five, most children have developed ToM. Before that, they often assume everyone sees the world exactly as they do. You've probably played hide-and-seek with a toddler and seen them cover their eyes, convinced that no one can see them. Cute, isn't it?

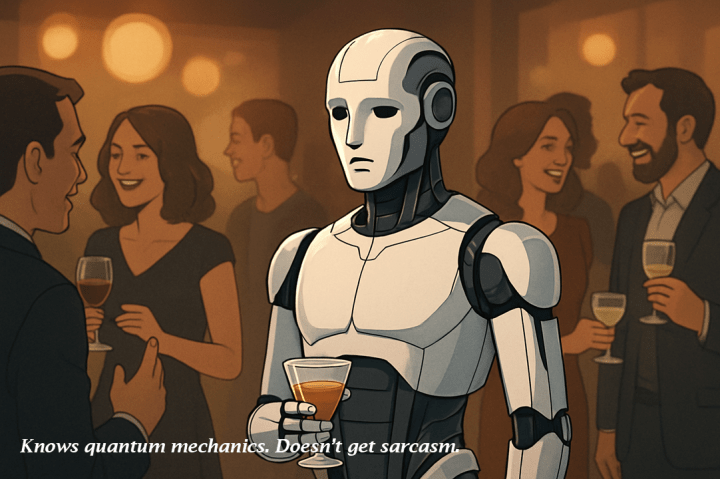

Here's the kicker: many of today's smartest AIs, for all their horsepower, behave in a shockingly similar way.

They're incredibly intelligent… but still "mind-blind."

AI Can Think. But Can It Understand You?

AI today is incredibly impressive. It can:

✔️ Solve problems

✔️ Simulate emotion

✔️ Reason at lightning speed

✔️ Even write poetry like Shelley (well, sometimes)

But here’s what most AI can’t do:

👉 Realize you have thoughts and beliefs that are not on the screen.

👉 Understand what you meant instead of what you said.

👉 Sense that pause in your voice, that tension in your “I’m fine,” or the little white lie you told to spare someone’s feelings.

No, this is not just a philosophical itch. It MATTERS.

Without Theory of Mind, AI Can…

❌ Misinterpret sarcasm

❌ Take things too literally

❌ Miss the emotional subtext

❌ Make odd or even harmful decisions without realizing it

It's like talking to someone super-smart who just… doesn't get you (think Sheldon from The Big Bang Theory). And that's the gap: AI can compute, but can it connect?

Here’s the Difference: Humans Live It. AI Learns It.

We develop Theory of Mind through:

- Real-life interactions

- Emotional feedback

- Trial, error, and empathy

AI, on the other hand, learns from data. Not birthdays, love at first sight, or heartbreaks. Not awkward silences or knowing glances. It analyses, but it doesn't live. It simulates empathy, but doesn't feel it.

Some exciting research is underway, such as DeepMind's ToMnet and studies in which GPT-4 performs decently on false-belief reasoning tasks. But it's early days. Let's be honest: passing a lab test on false beliefs isn't the same as understanding why your friend is upset over a text you didn't send!

So Where Does That Leave Us?

We may eventually build machines that think faster, better, more rationally than we do. But…

Computation ≠ Connection

As human + AI teams become the norm, one thing becomes clear: unless AI truly understands us — unless it gets what it means to be human — it will remain a brilliant outsider. And when that happens, interpersonal dynamics can get… well, messy. Awkward. Even risky.

(Interesting topic for an HR thought paper, no? 😊)

Because until AI can really “walk in our shoes,” it will only mimic connection — not experience it. Will AI ever truly “get” us? Or will it always be that clever kid at the party — great with facts, but missing the vibe?

Would love to hear how you see it.